Introduction

AI adoption is everywhere.

From chatbots and copilots to predictive analytics and automation, organisations are investing heavily in AI initiatives. Boards approve budgets, teams launch pilots, and dashboards fill with new insights.

Yet value creation is not.

Despite widespread adoption, many AI initiatives fail to deliver meaningful business outcomes. Projects stall. Pilots never scale. Leaders are left wondering why “working AI” isn’t moving the needle.

The issue isn’t ambition.

And it isn’t a lack of technology.

The real problem lies elsewhere.

The Problem Isn’t Models — It’s Execution

Most AI conversations today revolve around technology.

Leaders debate which large language model to adopt. Teams compare platforms, copilots, and vendors. Roadmaps fill with questions about accuracy, latency, integration, and cost. Organisations ask whether to build custom models or buy off-the-shelf solutions — and how advanced their AI capabilities really are.

These are reasonable questions.

They are just not the most important ones.

What organisations rarely ask is the harder, more consequential question:

How will this actually change decisions and outcomes?

AI can generate insight at unprecedented speed and scale. It can surface patterns, flag risks, and produce recommendations in real time. But insight alone does not create value.

Decisions do.

And AI, on its own, does not make decisions.

It does not own outcomes.

It does not execute.

The gap between insight and action is where most AI initiatives quietly fail.

Why AI Initiatives Fail

Hype Over Use Cases

Many AI initiatives begin with excitement rather than clarity.

A new model launches. A compelling demo circulates. Competitors announce AI features. Suddenly, organisations feel pressure to “do something with AI.” Teams rush to experiment — often without clearly defining what success looks like or which decisions AI is meant to support.

In this environment, “We need AI” becomes the objective.

Instead of anchoring initiatives to specific, repeatable use cases tied to real decisions, teams chase broad promises and impressive demonstrations. The result is technology that looks powerful but lacks direction.

Without a focused use case connected to execution, AI outputs become interesting — but irrelevant.

Misaligned Business Goals

Another common failure point is misalignment with core business objectives.

AI initiatives often sit within innovation teams, data science groups, or IT functions — disconnected from the decisions that drive revenue, cost, risk, or growth.

When AI is not explicitly linked to measurable outcomes, it struggles to gain traction. Leaders see activity — dashboards, pilots, proofs of concept — but not impact.

In these cases, AI does not fail because it’s inaccurate.

It fails because it’s solving the wrong problem.

If insights do not influence how resources are allocated, priorities are set, or behaviour changes, impact will always be limited.

Too Many Tools, No Decision Ownership

As AI adoption accelerates, tool sprawl tends to follow.

Organisations deploy multiple platforms, copilots, analytics tools, and dashboards. Each produces insights and recommendations — yet no one is clearly accountable for acting on them.

Who owns the decision this insight is meant to inform?

Who is responsible for using it — or ignoring it?

When ownership is unclear, insights pile up. Teams review reports, attend meetings, and debate implications — but action stalls. Over time, trust erodes, and AI outputs become optional rather than essential.

The issue is not a lack of intelligence.

It is a lack of execution clarity.

The Execution Gap

AI technology has never been more capable — yet organisational impact remains limited.

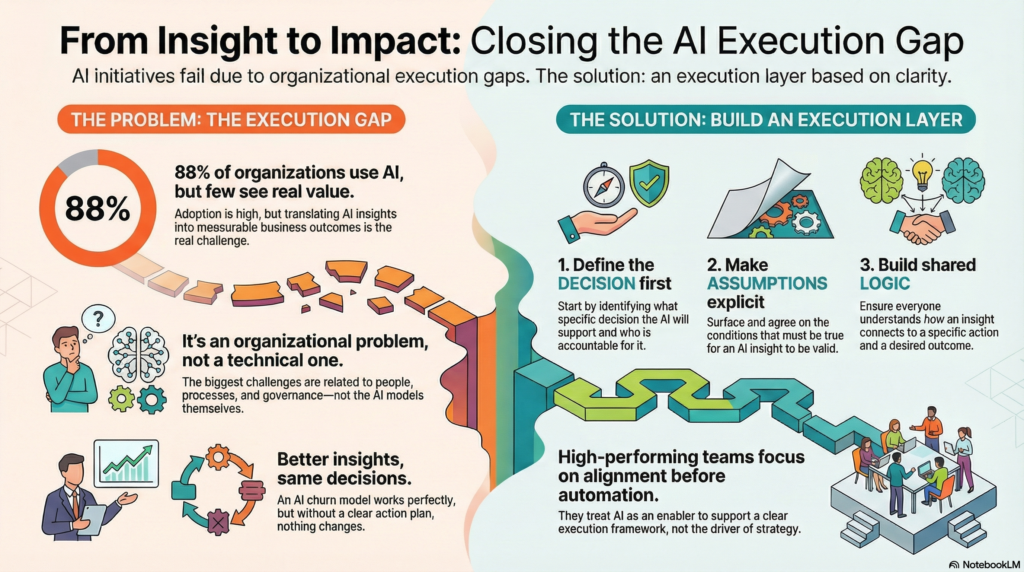

According to McKinsey, around 88% of organisations report using AI in at least one business function. Adoption is no longer the constraint.

Execution is.

Across industries, a consistent pattern emerges: AI tools work, but initiatives struggle to translate insight into measurable outcomes. Research from MIT and consulting firms such as BCG shows that most AI challenges are organisational — rooted in people, process, and governance — rather than technology.

This is the execution gap.

What the Execution Gap Looks Like in Practice

Customer insight without action

A B2B SaaS company deploys AI to analyse customer feedback and support tickets. The system accurately flags churn signals and recurring pain points.

But nothing changes.

Product teams lack clarity on which insights should influence the roadmap. Customer success teams don’t know when to intervene. Leadership has no defined process for turning insight into prioritised action.

The AI works.

The organisation doesn’t.

Forecasting that doesn’t influence strategy

A retailer implements AI-driven demand forecasting. Accuracy improves significantly.

Yet inventory decisions continue to follow existing planning cycles and managerial intuition. Forecasts are reviewed — but not trusted enough to override established processes.

Better forecasts.

Same decisions.

Same outcomes.

Why the Gap Persists

The same structural issues appear repeatedly:

- Unclear decision ownership

- Insights arriving outside real decision moments

- Lack of shared logic connecting insight to outcome

Gartner has noted that many AI initiatives are paused or cancelled after pilots — not because models fail, but because organisations struggle to sustain business value.

Insight without execution is not impact.

The Missing Layer: Execution Clarity

AI tools aren’t the problem.

Execution clarity is.

Execution clarity emerges when organisations align three foundational elements: decisions, assumptions, and logic.

Decisions

Every AI initiative should begin with a simple question:

What decision is this meant to support?

In practice, this question is often unanswered. Teams build systems without defining which decisions should change or who owns them.

Execution clarity requires explicitly defining:

- What decisions must be made

- Who owns them

- When and how AI should inform them

If AI does not influence a decision, it will not create value.

Assumptions

Every decision rests on assumptions.

AI systems encode assumptions about data quality, behaviour, and context. When these remain implicit, trust erodes and execution slows.

Making assumptions explicit allows teams to:

- Validate what must be true

- Debate constraints openly

- Build shared confidence

Alignment improves before accuracy does.

Logic

Logic connects insight to outcome.

It explains why a recommendation should lead to a result — and what action should follow. Without shared logic, AI insights feel detached from reality.

AI supports this logic.

It does not define it.

What High-Performing Teams Do Differently

High-performing teams don’t win with better tools.

They win with better execution discipline.

They align before they automate.

They define decision rights clearly.

They invest in shared logic across teams.

AI becomes an accelerator — not a substitute for clarity.

The result:

- Faster decisions

- Shorter debates

- Measurable outcomes

They don’t do more with AI.

They do less — more clearly.

Introducing the Execution Layer

The execution layer sits between strategy and AI.

Strategy sets direction.

AI generates insight.

The execution layer connects the two.

It defines decisions, surfaces assumptions, and standardises logic so insight reliably turns into action. Rather than adding another tool, it creates a shared system for how decisions are made.

This is where AI finally scales.

Conclusion

AI doesn’t fail because it lacks intelligence.

It fails because execution lacks clarity.

Without clear decisions, explicit assumptions, and shared logic, AI initiatives produce activity without impact. The execution layer closes this gap — allowing AI to support decisions rather than float alongside them.

Fix clarity first.

Let technology follow.

That is how AI moves from promise to performance.

If you want to apply this approach to a real white paper, starting with a structured Notion framework can help clarify execution before writing or design. Such a system is designed to turn insight into publishable authority content.